|

Junliang Ye | 叶俊良

I am a third-year master's student in the Department of Computer Science at Tsinghua University, advised by Prof. Jun Zhu. In 2022, I obtained my B.S. from the School of Mathematical Sciences at Peking University. My research interests lie in computer vision (e.g., 3D AIGC and video generation), multimodal large models (e.g., native large models), and reinforcement learning from human feedback (DPO, GRPO).

My email: yejl23@mails.tsinghua.edu.cn

Email / CV / Google Scholar / Github |

|

- 2026-02: One paper on 3D-UMM is accepted by CVPR 2026 Findings.

- 2026-02: One paper on Video Generation is accepted by TIP 2026.

- 2026-01: Two papers on 3D-MLLM and 3D-Editing are accepted by ICLR 2026.

- 2025-09: One paper on 3D-UMM is accepted by NeurIPS 2025.

- 2025-09: One paper on 3D Generation is accepted by TPAMI 2025.

- 2025-06: One paper on 3D Generation is accepted by ICCV 2025.

- 2024-07: Two papers on 3D & 4D Generation are accepted by ECCV 2024.

Preprints

*equal contribution †Project leader

|

Junliang Ye*, Ruowen Zhao*, Zhengyi Wang*, Yikai Wang, Jun Zhu arXiv, 2025 [arXiv] [Code] [Project Page]

We propose DeepMesh-v2, which generates meshes with intricate details and precise topology, surpassing state-of-the-art methods in both precision and quality. |

Selected Research

*equal contribution †Project leader

|

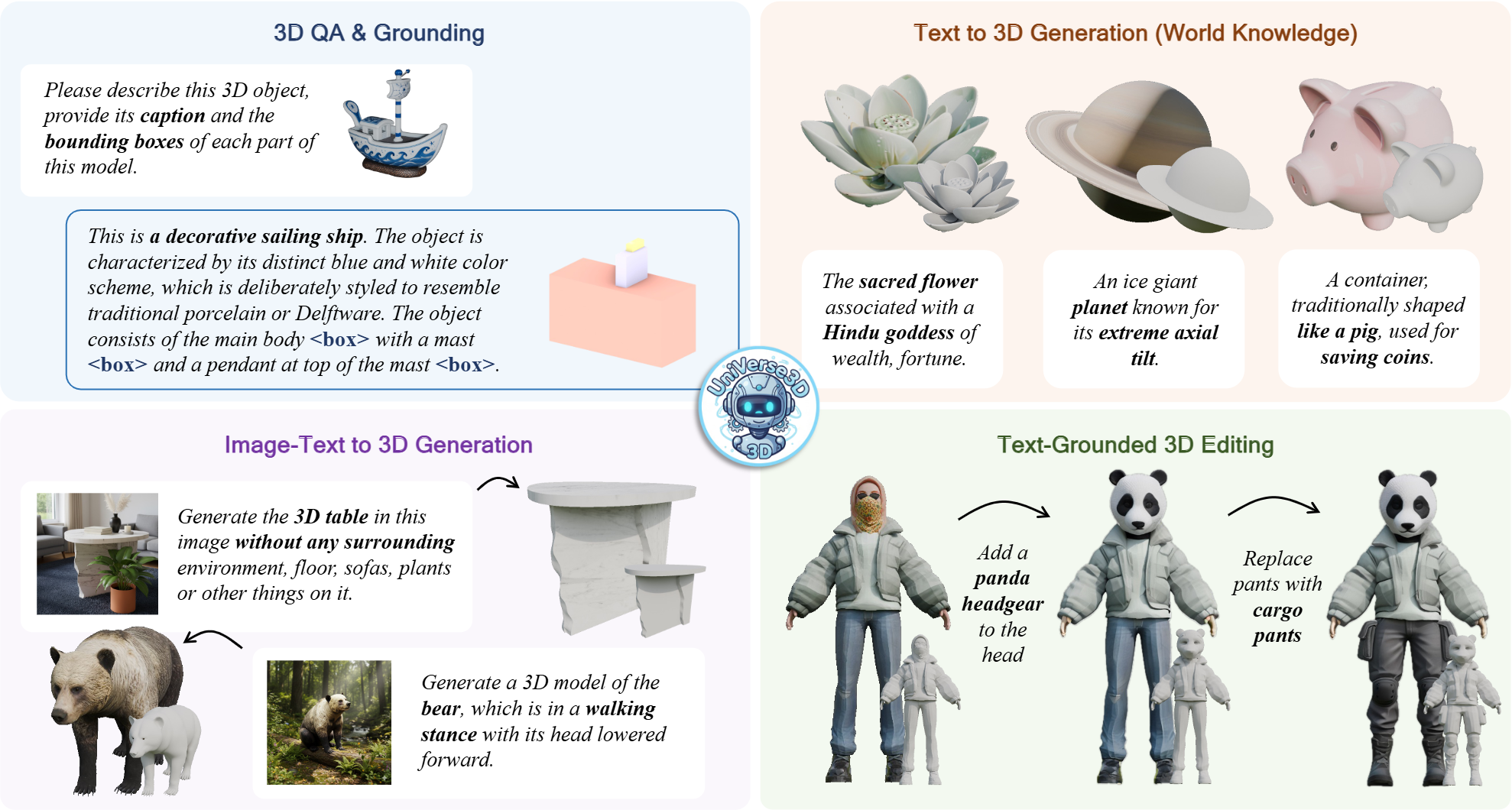

Junliang Ye*, Zehuan Huang*, Yansong Qu*, Chunshi Wang, Yunhan Yang, Yang Li, et al. Conference on Computer Vision and Pattern Recognition (CVPR), 2026 (Findings, top 36%) [arXiv] [Code] [Project Page]

We propose UniVerse3D, the first 3D unified multimodal model. |

|

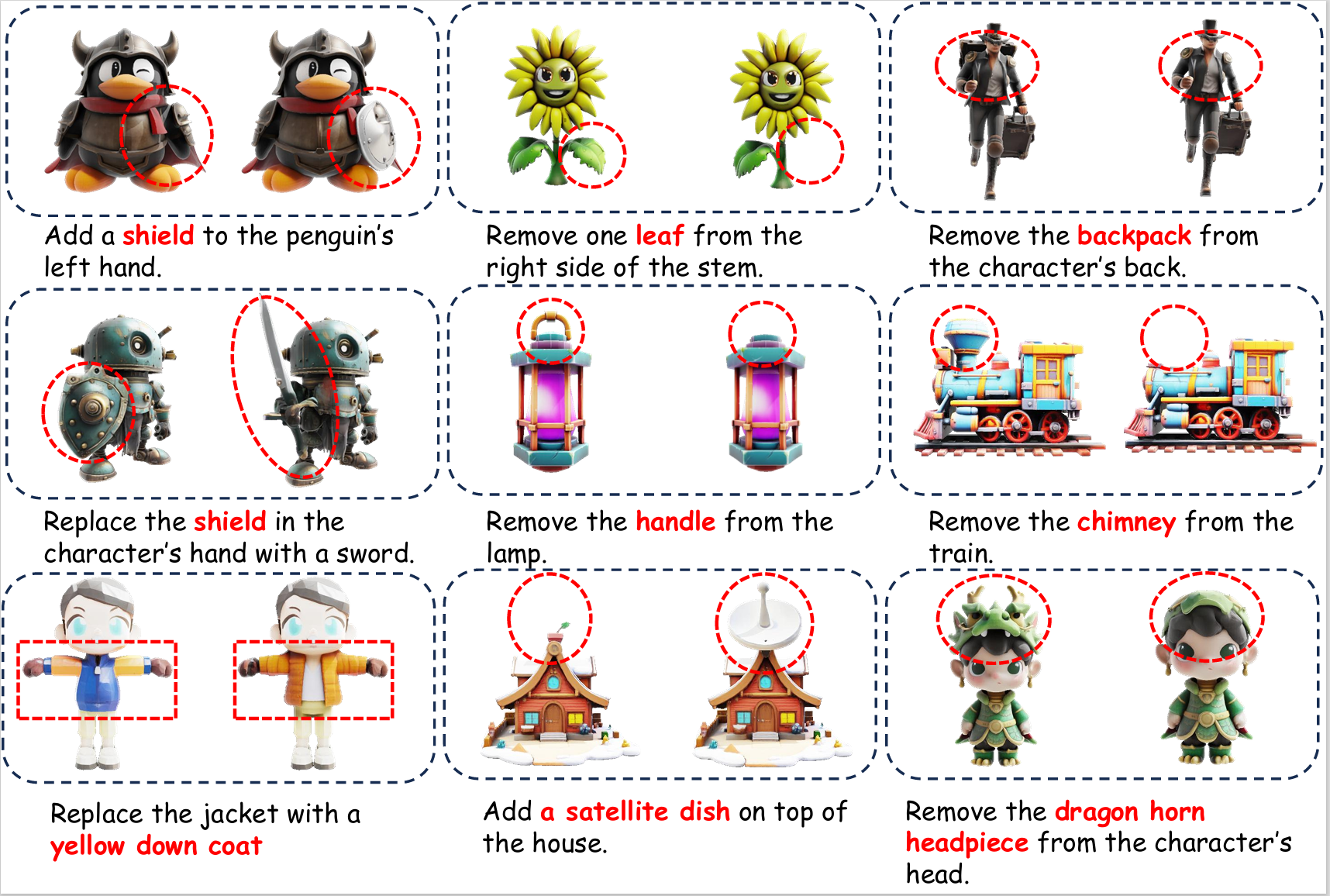

Junliang Ye*, Shenghao Xie*, Ruowen Zhao, Zhengyi Wang, Hongyu Yan, Wenqiang Zu, et al. International Conference on Learning Representations (ICLR), 2026 [arXiv] [Code] [Project Page]

We propose Nano3D, a training-free framework for precise and coherent 3D object editing without masks. |

|

Chunshi Wang*, Junliang Ye†*, Yunhan Yang*, Yang Li, Zizhuo Lin, Jun Zhu, et al. International Conference on Learning Representations (ICLR), 2026 [arXiv] [Code] [Project Page]

We introduce Part-X-MLLM, a native 3D multimodal large language model that unifies diverse 3D tasks by formulating them as programs in a structured, executable grammar. |

|

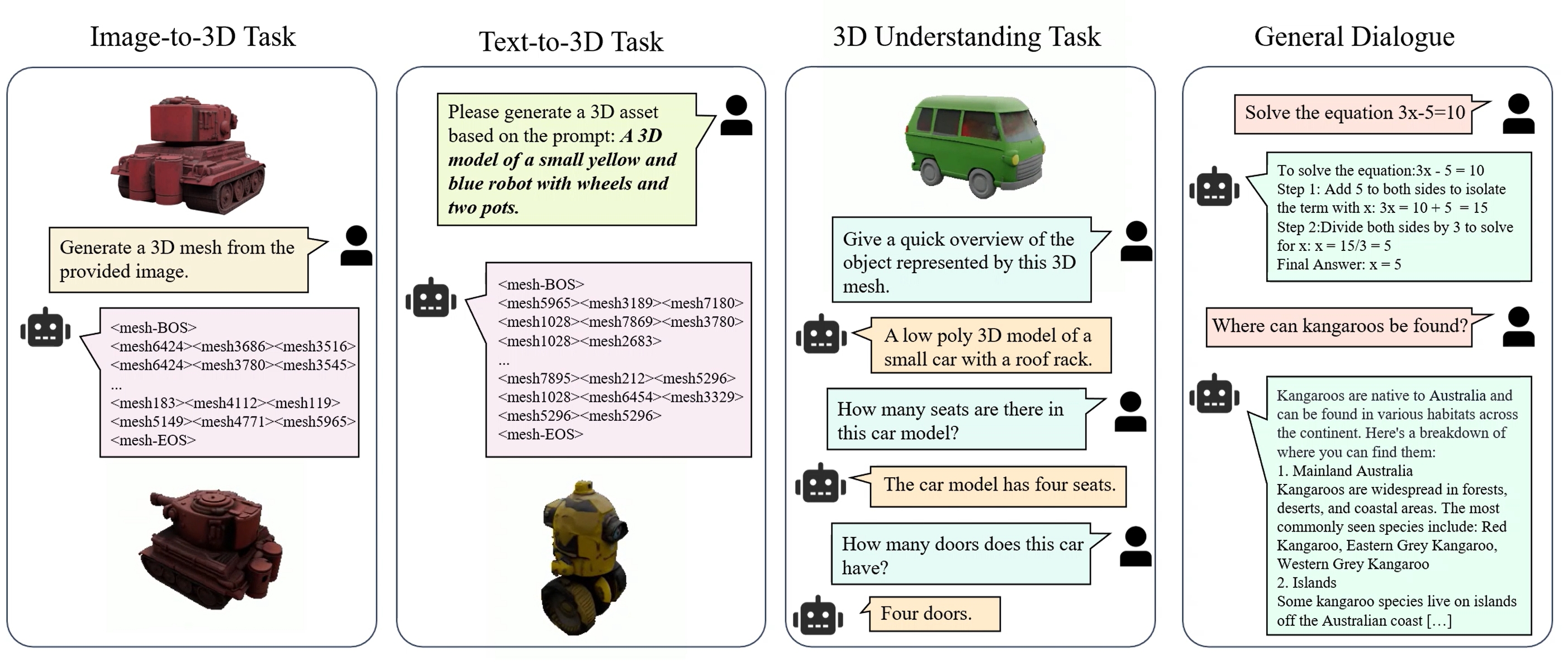

Junliang Ye*, Zhengyi Wang*, Ruowen Zhao*, Shenghao Xie, Jun Zhu Conference on Neural Information Processing Systems (NeurIPS), 2025 (Spotlight, top 3.2%) [arXiv] [Code] [Project Page]

We propose ShapeLLM-Omni, a multimodal large model that integrates 3D generation, understanding, and editing capabilities. |

|

Ruowen Zhao*, Junliang Ye*, Zhengyi Wang*, Guangce Liu, Yiwen Chen, Yikai Wang, et al. IEEE International Conference on Computer Vision (ICCV), 2025 [arXiv] [Code] [Project Page]

We propose DeepMesh, which generates meshes with intricate details and precise topology, surpassing state-of-the-art methods in both precision and quality. |

|

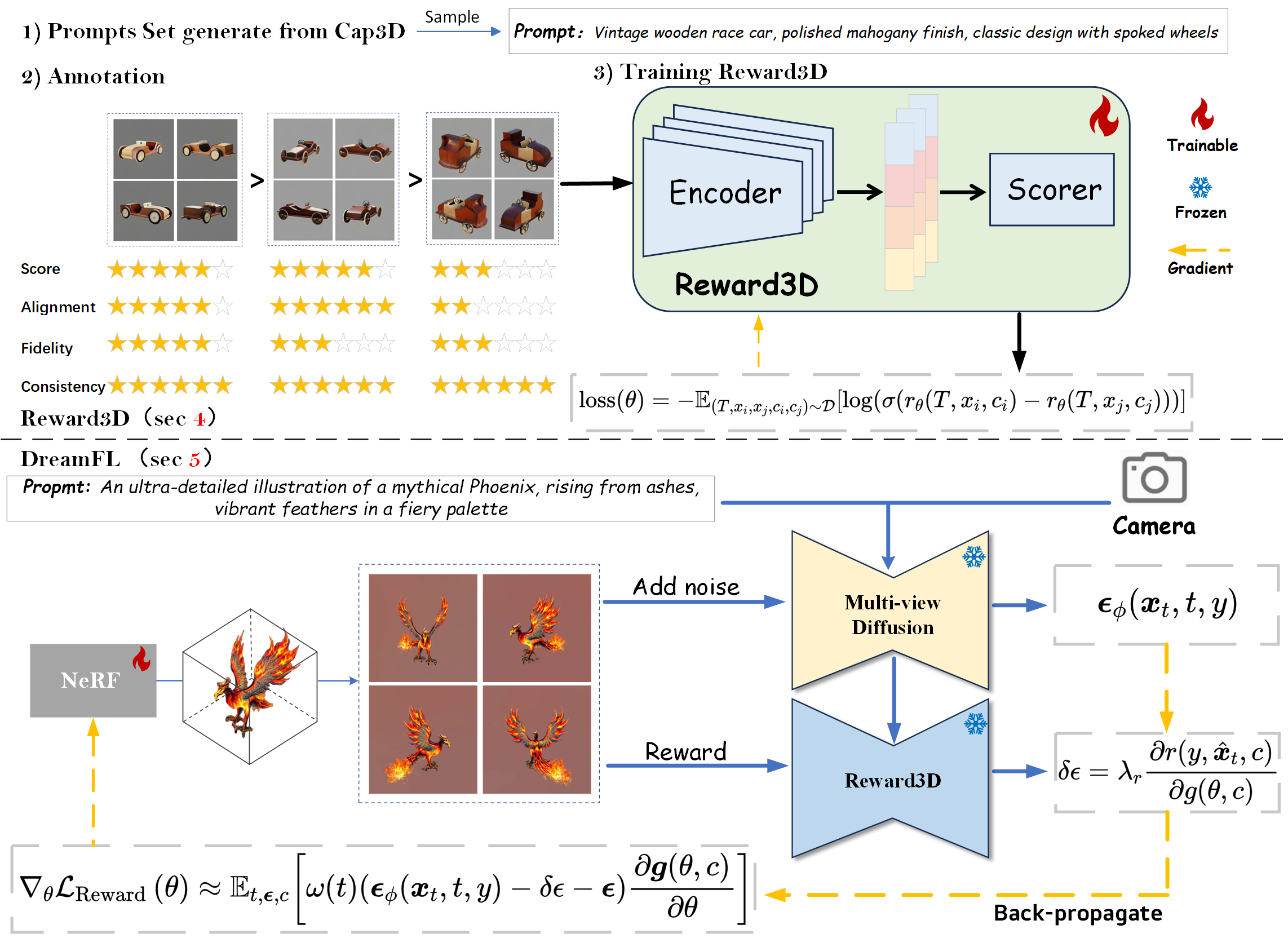

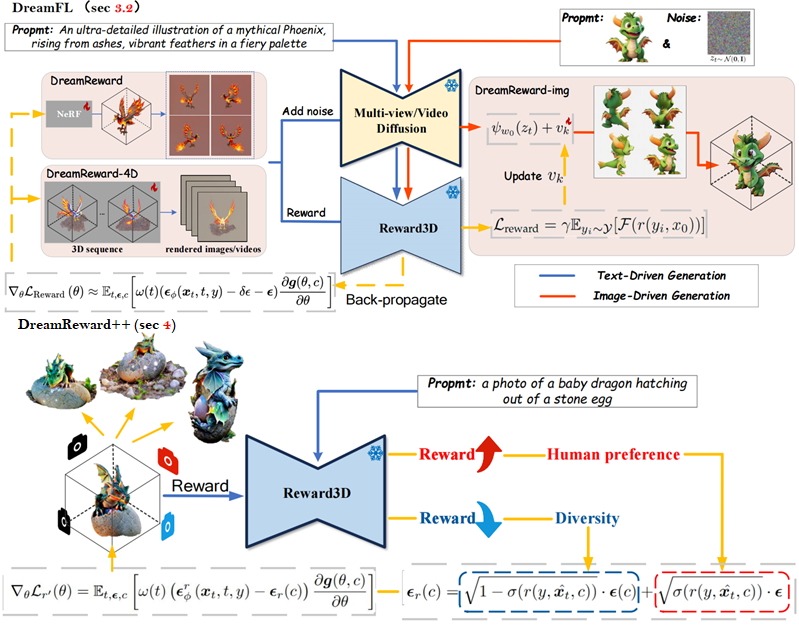

Junliang Ye*, Fangfu Liu*, Qixiu Li, Zhengyi Wang, Yikai Wang, Xinzhou Wang, et al. European Conference on Computer Vision (ECCV), 2024 [arXiv] [Code] [Project Page]

We present a comprehensive framework, coined DreamReward, to learn and improve text-to-3D models from human preference feedback. |

Additional Projects

|

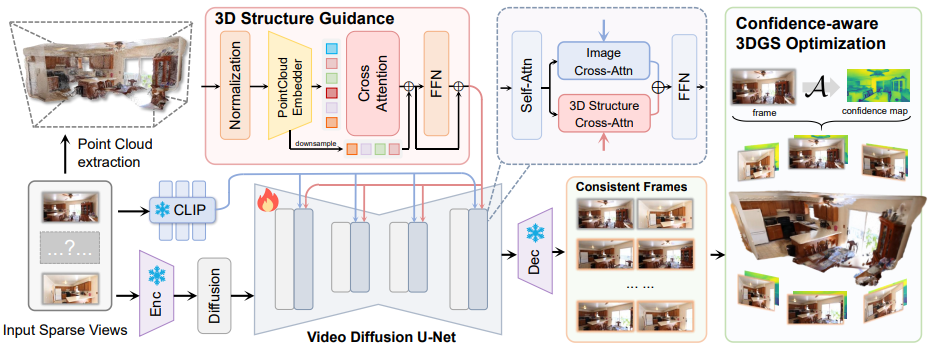

Fangfu Liu*, Wenqiang Sun*, Hanyang Wang*, Yikai Wang, Haowen Sun, Junliang Ye, et al. IEEE Transactions on Image Processing (TIP), 2026 [arXiv] [Code] [Project Page]

In this paper, we propose ReconX, a novel 3D scene reconstruction paradigm that reframes the ambiguous reconstruction challenge as a temporal generation task. |

|

Fangfu Liu, Junliang Ye, Hanyang Wang, Zhengyi Wang, Jun Zhu, Yueqi Duan IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2025 [arXiv] [Code] [Project Page]

We present a comprehensive framework, coined DreamReward++, where we introduce a reward-aware noise sampling strategy. |

|

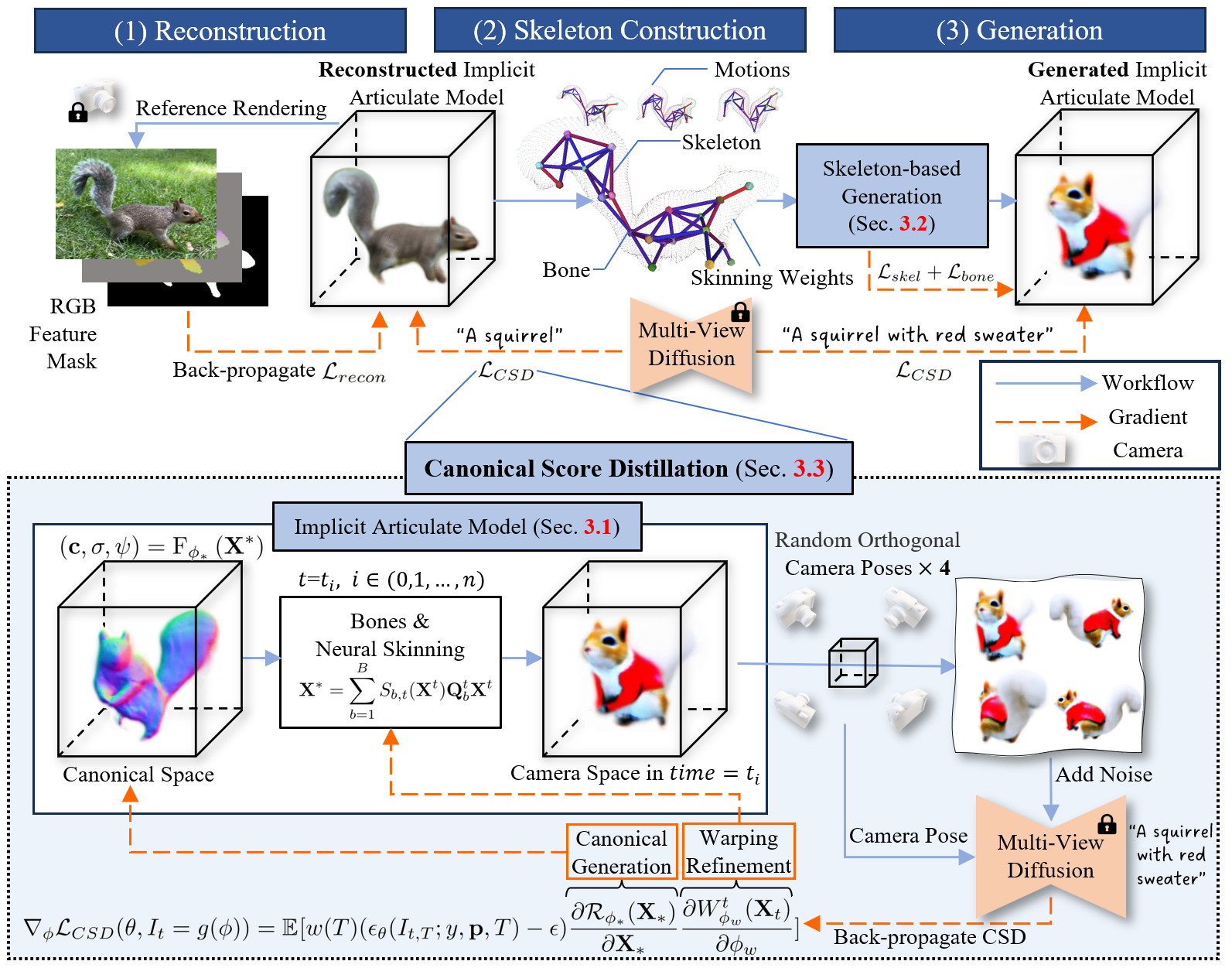

Xinzhou Wang, Yikai Wang, Junliang Ye, Fucun Sun, Zhengyi Wang, Ling Wang, et al. European Conference on Computer Vision (ECCV), 2024 [arXiv] [Code] [Project Page]

We propose ANIMATABLEDREAMER, a framework with the capability to generate generic categories of non-rigid 3D models. |

|

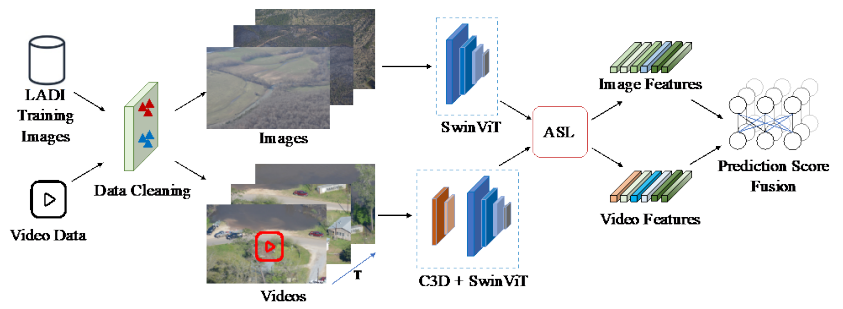

Yanzhe Chen, HsiaoYuan Hsu, Junliang Ye, Zhiwen Yang, Zishuo Wang, Xiangteng He, Yuxin Peng Virtual, Online [arXiv] [Code] [Project Page]

We achieved first place in the TRECVID 2022 competition. |

- Reviewer for CVPR 2023 and ICLR 2026.